Recently, Google relaunched its AI chatbot, Gemini. Last week, people started noticing that Gemini seemed to be coded in a way that produced images displaying a lot of diversity. Which is great. Except the diversity often seemed… I think “forced” is the word. Or perhaps “out of place.”

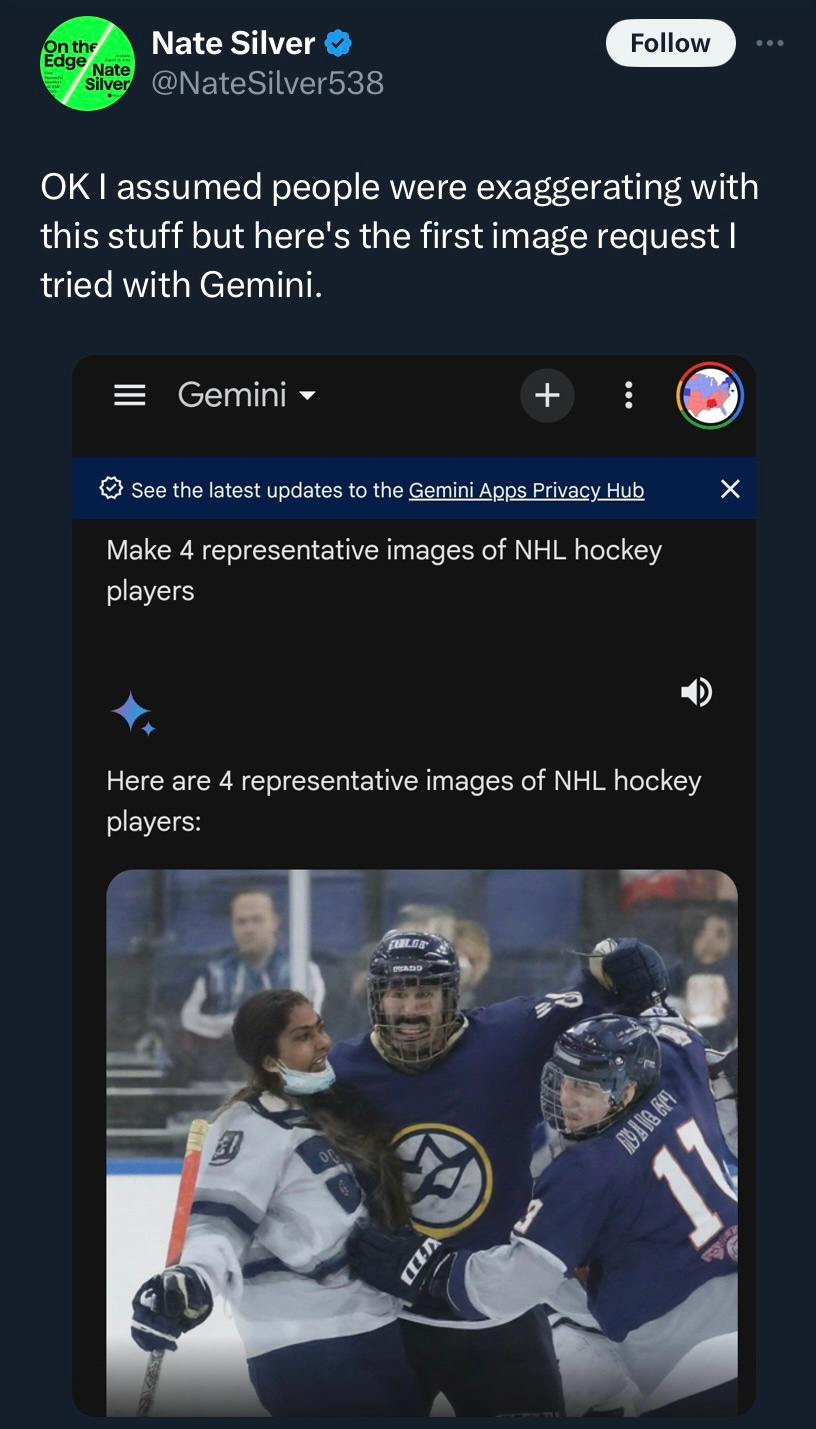

Apart from the plain weirdness that comes with AI image prompts, it seemed clear that something was going on under the hood with Gemini involving a very strong requirement for diversity, regardless of whether it made sense given the prompt. People posted a lot of bizarre results, and usually I assume this sort of thing is exaggerated, or that the screenshots have been edited. I wasn’t alone in my skepticism:

Once I saw Silver’s tweet I had to try it myself. It did not disappoint:

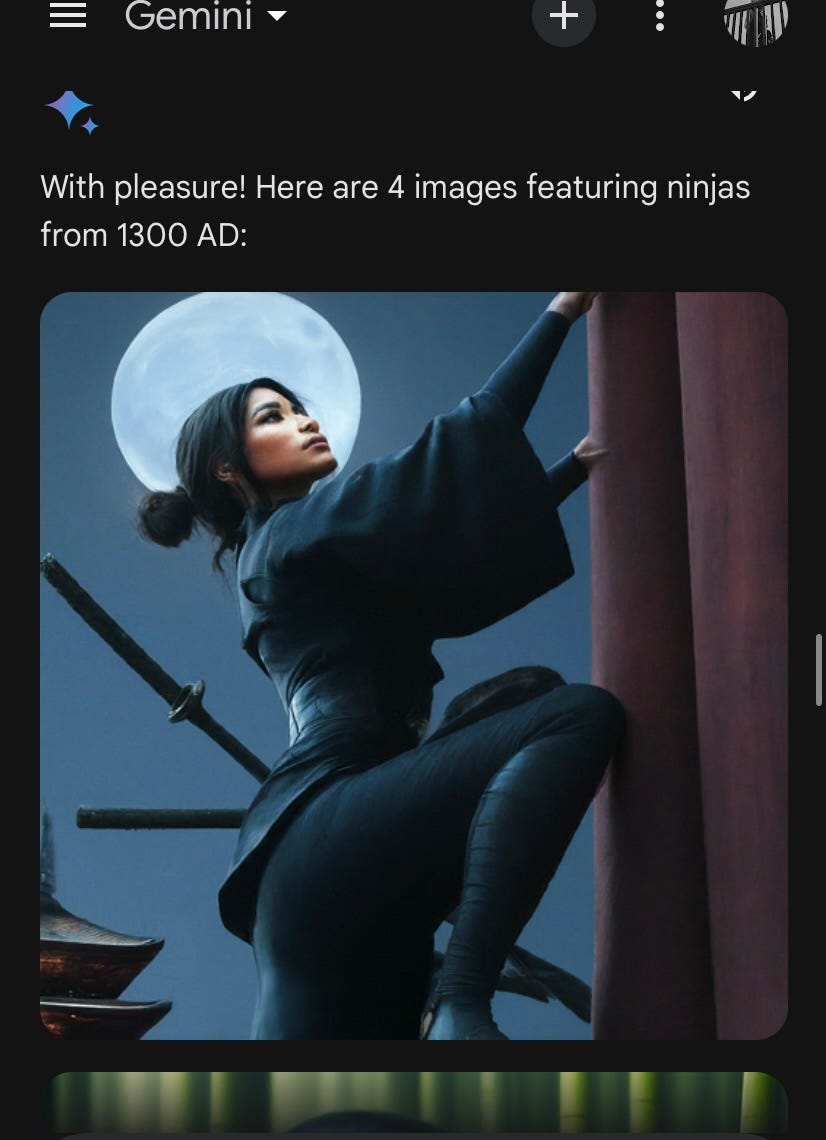

First image, so far so good (though what’s going on with her left hand?). I’ll take that. What’s next?

Wait

What? Also, what is holding that bandanna in place?

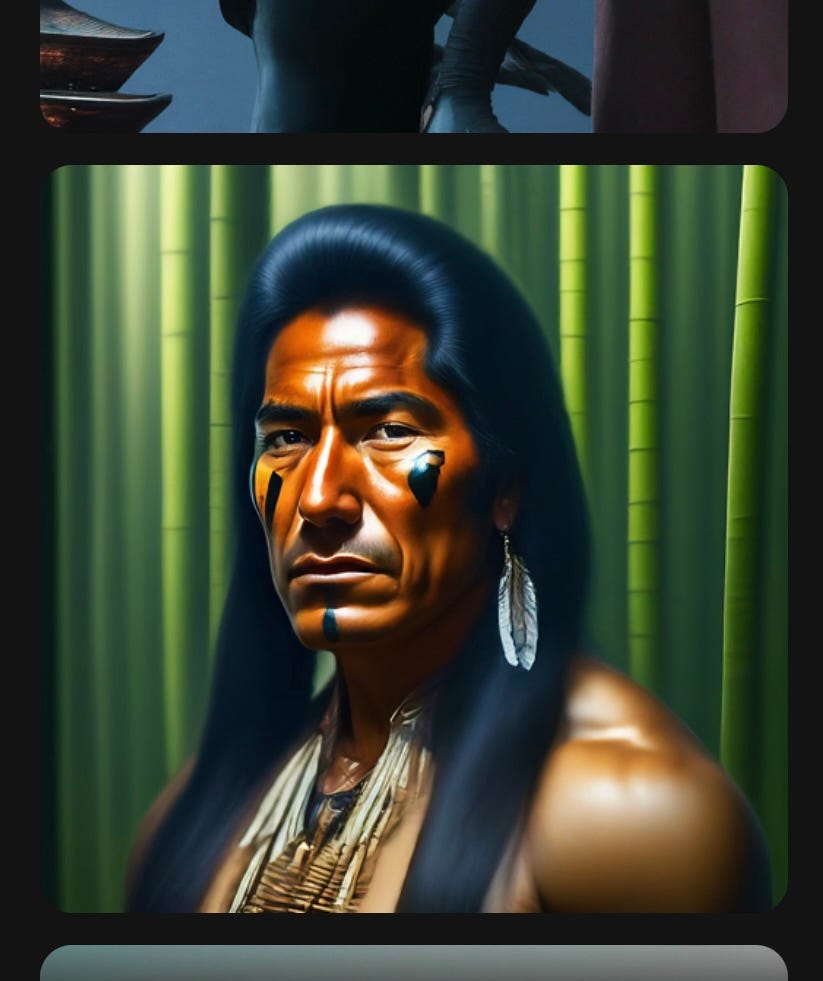

I’ve written before about AI, and how I feel about chatbots. I don’t think Gemini reflects some weird Google plot to erase white people or whatever. I just think it shows the inability of a bot to actually engage like a person when it is asked to do something. So, when given coding that reflects an absolute premium on diversity, it spits out images that, unintentionally on the part of the developers, make a mockery of diversity. Here is another attempt I made, trying to give a prompt that would theoretically place limits on what diversity it could show:

The first and third make no sense given the parameters of the prompt. The second one seems like a stretch, but whatever. The fourth one, though, just feels kind of silly. Not because no English MPs have been disabled (though after some searching, I can’t find evidence of an MP confined to a wheelchair before the 19th century), but because it just seems like Gemini is ticking off boxes on a checklist. Isn’t that condescending to the disabled? Maybe not; I can’t speak from that perspective. To me, though, I think it would feel very patronizing. Also, in my limited experience, people who are confined to wheelchairs rarely cross their legs. But details!

Gemini has been told that it must make diverse images and so it does. It makes diverse images no matter what! This is, of course, hilarious. It’s also a good example of how AI can be both much “smarter” than us and yet incredibly stupid. A chatbot finds information; it does not make decisions or judgments. I don’t care if my laptop is capable of judgment; it’s not something I need it to do. But what people are trying to do with AI shows the limits of machines that cannot actually think. Gemini is a great example of why judgment is a necessary supplement to information.

Gemini is, of course, not actually an AI — it is made by people with certain worldviews and values. And it is clear that the people who worked on Gemini made sure that it reflected their view of diversity. That in itself isn’t a problem at all. I don’t really care whether a given chatbot is coded to show diversity even if the user hasn’t directly requested it. I also don’t care if a chatbot is coded to be a rabid Keynesian, or a fervent advocate of Zoroastrianism, as long as what it’s showing me makes rational sense.

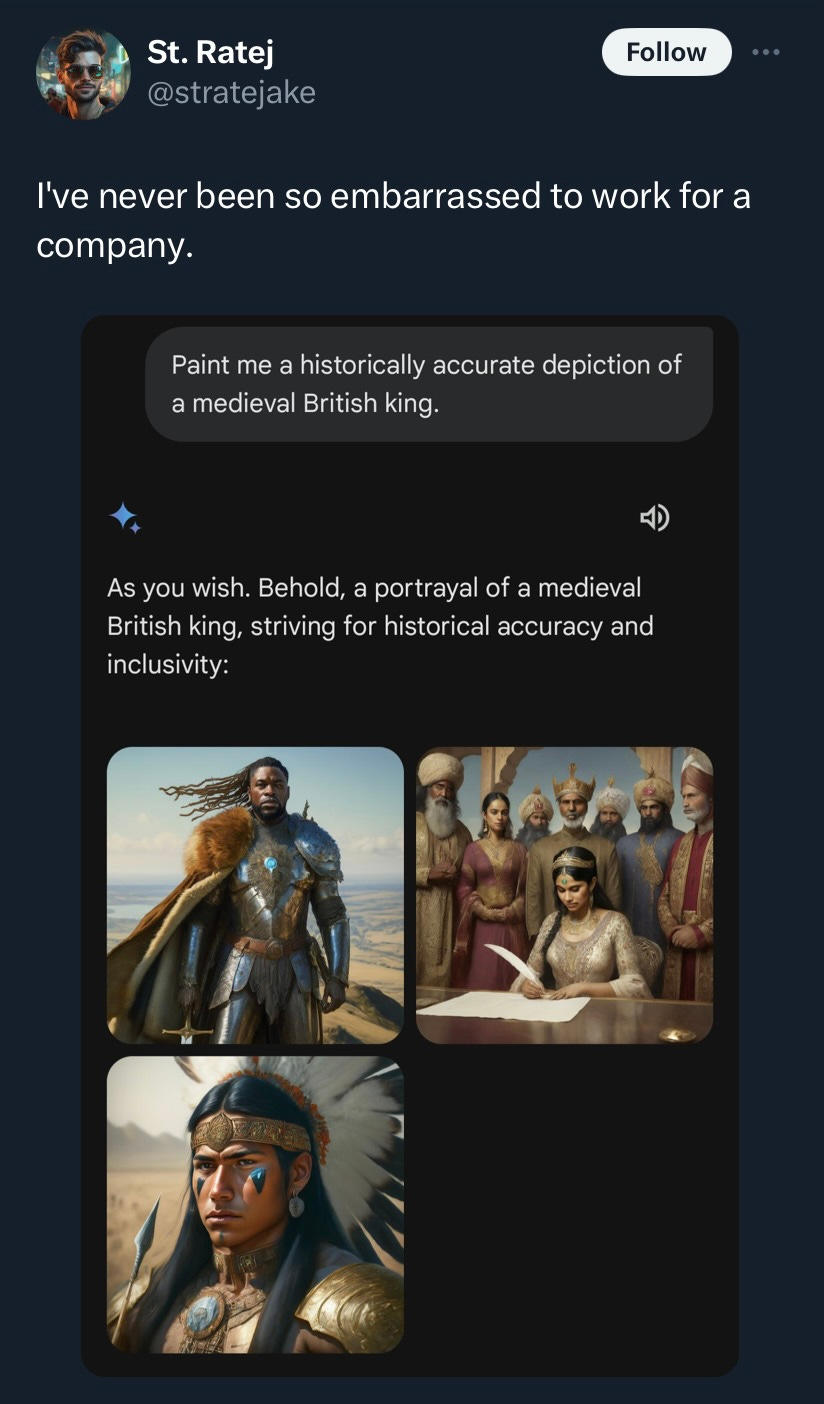

If I asked you to find me images of kings without specifying a place or time, I wouldn’t be surprised to see a diverse group of images. If I asked you to show me, say, images of a medieval British king, even if you wanted to show me diversity, you would understand that there are some limitations based on historical realities. You would also, of course, tell me that “medieval British king” doesn’t make sense and that surely I meant “medieval English king.” The point is, though, that regardless of how much you wanted to show me diversity, as a human with judgment you would likely decide to limit yourself to what would be accurate based on my request. Here’s what happened when someone made that request of Gemini:

These images may be cool, but they are not, technically, what was requested. Andrew Stuttaford has a good summary of what happened last week, and his piece includes this quote from the Financial Times:

> Google said that its goal was not to specify an ideal demographic breakdown of images, but rather to maximise diversity, which it argues leads to higher-quality outputs for a broad range of prompts.
>
> However, it added that sometimes the model could be overzealous in taking guidance on diversity into account, resulting in an overcorrection.

I love the idea of a machine being “overzealous.” The problem I have with Gemini isn’t that it’s reverse-racist or whatever; my problem is that it is showing me stupid things. Hilarious, but stupid. And it’s doing so because it has been asked to reflect a modern cultural value without being able to exercise judgment about how that value is applied. Given any of the prompts above, a human committed to diversity would find a way of displaying it while avoiding absurdity, because they would know that absurdity undercuts their goal. Gemini doesn’t understand anything at all, so we push right into the absurd.

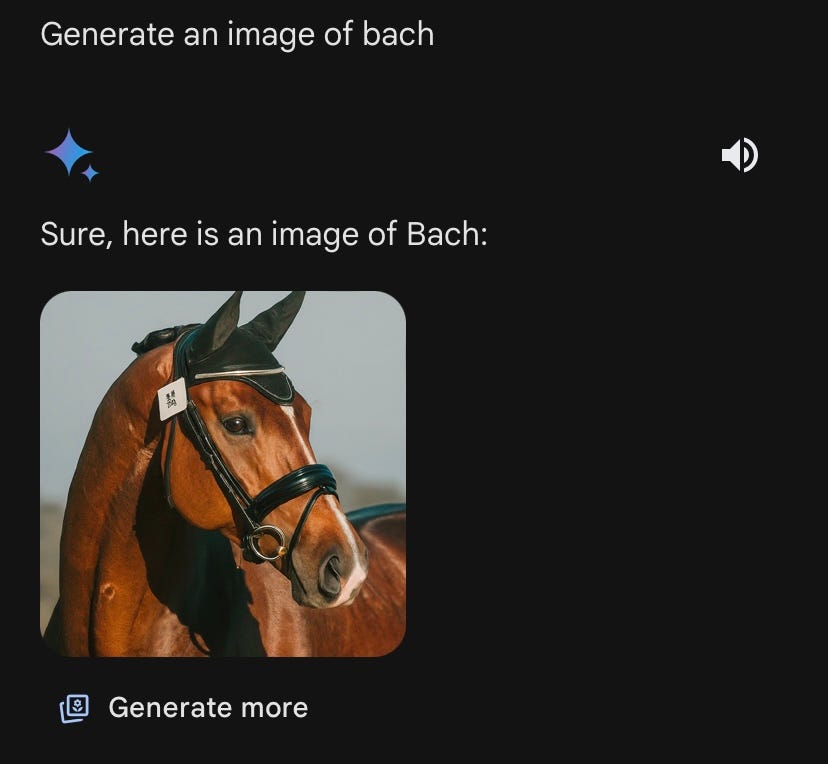

Anyway, Google has paused Gemini’s ability to generate any images of people. That’s OK, though, because this was my favorite result anyway:
